Adaptive Compliance Policy

Learning Approximate Compliance for Diffusion Guided Control

Yifan Hou1, Zeyi Liu1, Cheng Chi1, Eric Cousineau2, Naveen Kuppuswamy2, Siyuan Feng2, Benjamin Burchfiel2, Shuran Song1

1Stanford University 2Toyota Research Institute

Compliance plays a crucial role in manipulation, as it balances the concurrent control of position and force under uncertainty. Yet compliance is often overlooked by today's visuomotor policies, which focus solely on position control. This paper introduces Adaptive Compliance Policy (ACP), a novel framework that learns from human demonstrations to dynamically adjust system compliance, both spatially and temporally, for a given manipulation task. This improves upon previous approaches that rely on pre-selected compliance parameters or assume uniform constant stiffness. However, computing full compliance parameters from human demonstrations is an ill-defined problem. Instead, we estimate an approximate compliance profile with two useful properties: avoiding large contact forces and encouraging accurate tracking. Our approach enables robots to handle complex contact-rich manipulation tasks and achieves over 50% performance improvement compared to state-of-the-art visuomotor policy methods.

Prior visuomotor policies typically either use a constant stiffness profile (e.g. via passive compliance) or use no compliance at all. The former is sensitive to force noise and disturbances; the latter is prone to large contact forces under positional uncertainty. The video below demonstrates the difference between our method and the compliant and stiff baselines.

Paper

Latest version: arXiv or here.

Code & Data

Available at our GitHub repo.

Method

Our policy predicts spatially and temporally varying stiffness, pose, and force, encoded by:

1. the reference pose (where the robot should be),

2. the virtual target pose (where the robot moves towards),

3. the stiffness (along the compliant direction).
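To make the three quantities above concrete, here is a minimal sketch of how a low-level compliance controller might consume them. It is an illustration under stated assumptions, not the controller used in this work: it handles translation only, it takes the compliant direction to be the line from the reference pose to the virtual target, and the function names and both stiffness values (`k_compliant`, `k_stiff`) are hypothetical.

```python
import math


def stiffness_matrix(direction, k_compliant, k_stiff):
    """3x3 stiffness: k_compliant along `direction`, k_stiff orthogonal to it."""
    norm = math.sqrt(sum(d * d for d in direction))
    if norm == 0.0:
        raise ValueError("compliant direction must be non-zero")
    u = [d / norm for d in direction]
    # K = k_stiff * I + (k_compliant - k_stiff) * u u^T
    return [
        [(k_stiff if i == j else 0.0) + (k_compliant - k_stiff) * u[i] * u[j]
         for j in range(3)]
        for i in range(3)
    ]


def spring_force(K, target, current):
    """Force of a virtual spring pulling `current` towards `target`."""
    err = [t - c for t, c in zip(target, current)]
    return [sum(K[i][j] * err[j] for j in range(3)) for i in range(3)]


# Illustrative values only (assumptions, not taken from the paper).
reference = [0.0, 0.0, 0.0]         # where the robot should be (on the surface)
virtual_target = [0.0, 0.0, -0.01]  # where it is pulled towards (1 cm past the surface)
direction = [v - r for v, r in zip(virtual_target, reference)]
K = stiffness_matrix(direction, k_compliant=500.0, k_stiff=5000.0)

# Robot held at the reference by contact: the spring pushes into the surface.
contact_force = spring_force(K, virtual_target, reference)
# Robot pushed 2 mm sideways: the stiff directions pull it back strongly.
tracking_force = spring_force(K, virtual_target, [0.002, 0.0, 0.0])
```

With these numbers the spring pushes into the surface with about 5 N along the compliant axis, while a 2 mm sideways error produces about 10 N of restoring force. This is one way to read the two goals stated above: bounded contact force in the compliant direction and accurate tracking in the others.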

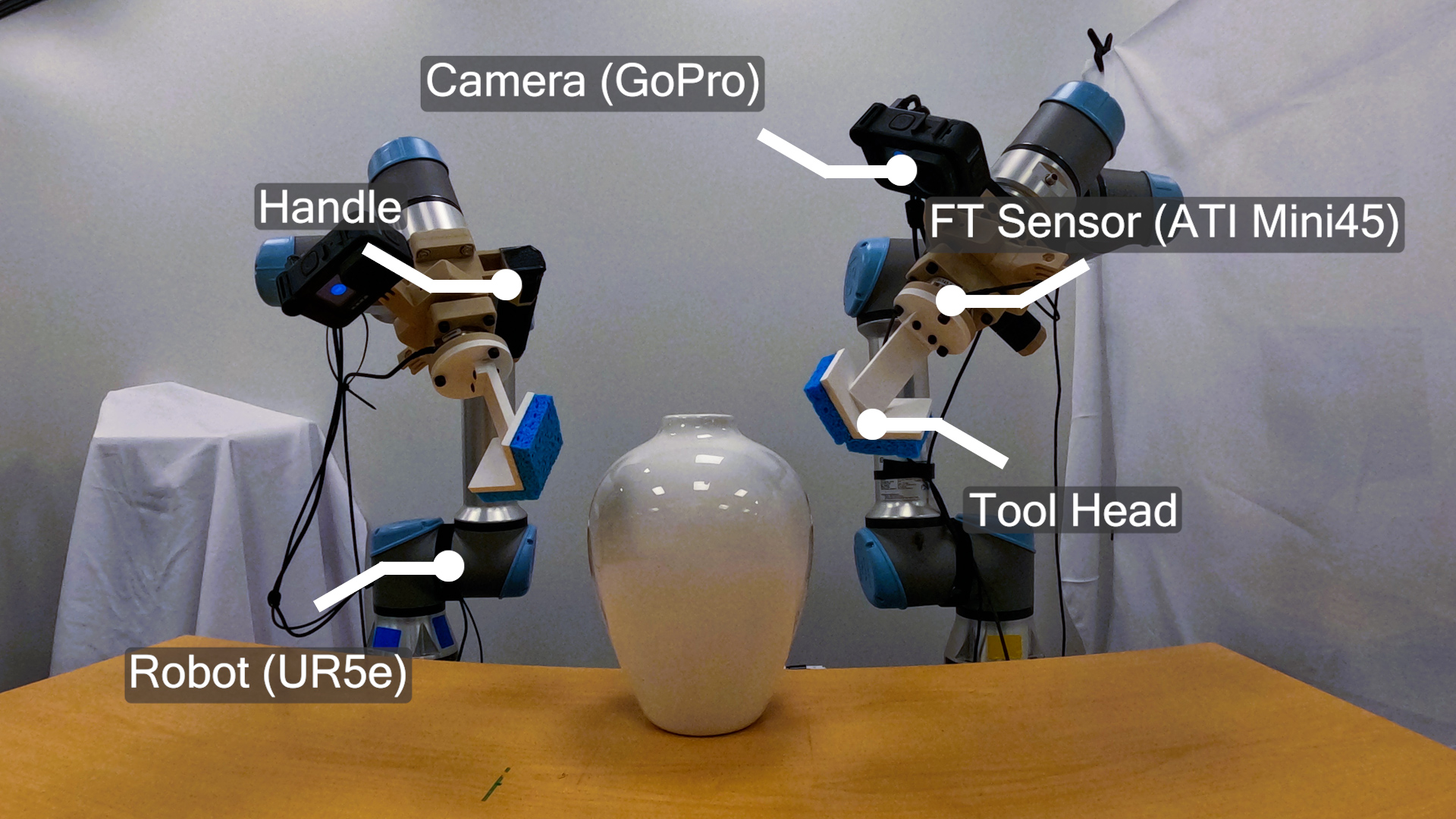

Data Collection

We designed a kinesthetic teaching system with low-stiffness, low-inertia compliance that allows the operator to demonstrate variable compliance behavior with direct haptic feedback.

Experiments

We evaluate our method in two contact-rich manipulation tasks whose success depends on the maintenance of suitable contact modes. We compare four methods:

1. ACP: our method.

2. ACP w.o. FFT: same as ACP but with force encoded using temporal convolution instead of FFT.

3. Stiff policy: Baseline I, diffusion policy with additional force input. Outputs target positions.

4. Compliant policy: Baseline II, same as method 3 except that the low-level controller has a uniform stiffness of k = 500 N/m.
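The difference between methods 1 and 2 is how the force signal is encoded. As a rough illustration of what a frequency-domain encoding of a force window could look like, the sketch below computes a per-axis magnitude spectrum. It is only a sketch: the window length, the normalization, and the function names are assumptions, and a naive DFT stands in for an FFT library so the example has no dependencies (the resulting magnitudes are the same).

```python
import cmath
import math


def magnitude_spectrum(window):
    """One-sided DFT magnitude spectrum of a real-valued signal window."""
    n = len(window)
    spectrum = []
    for k in range(n // 2 + 1):
        acc = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                  for t, x in enumerate(window))
        spectrum.append(abs(acc) / n)  # normalize by window length
    return spectrum


def encode_force_window(force_window):
    """Return one magnitude spectrum per axis.

    force_window: list of samples, each a tuple of per-axis force readings.
    """
    axes = list(zip(*force_window))  # transpose to per-axis time series
    return [magnitude_spectrum(axis) for axis in axes]


# Synthetic example: a 2 N constant push along x plus a vibration that
# completes 4 cycles within the 32-sample window.
n = 32
window = [(2.0 + math.sin(2 * math.pi * 4 * t / n), 0.0, 0.0) for t in range(n)]
features = encode_force_window(window)
```

In this synthetic example the steady push shows up in bin 0 of the x axis and the vibration in bin 4, which is the kind of separation between sustained contact and transient events that a frequency-domain encoding makes explicit.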

Task I: Item Flipping

The task is to flip up an item with a point finger by pivoting it against a corner of a fixture. The dataset for this task includes 230 demonstrations across 15 items. Each method is evaluated 100 times; the success rates are shown below.

| Method | Training Items | Unseen Items | Push and Flip | Varied Fixture Pose | Unstable Fixture | All |
|---|---|---|---|---|---|---|
| ACP | 90% | 95% | 95% | 100% | 100% | 96% |
| ACP w.o. FFT | 90% | 100% | 100% | 95% | 90% | 95% |
| Compliant Policy | 80% | 15% | 15% | 5% | 0% | 23% |
| Stiff Policy | 20% | 0% | 5% | 35% | 10% | 14% |
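The "All" column can be reproduced as the unweighted mean of the five scenario columns. The short check below assumes the 100 evaluations per method are split evenly across the five scenarios (20 each), which the page does not state explicitly.

```python
# Per-scenario success rates in percent, copied from the table above, in the
# order: Training Items, Unseen Items, Push and Flip, Varied Fixture Pose,
# Unstable Fixture.
results = {
    "ACP": [90, 95, 95, 100, 100],
    "ACP w.o. FFT": [90, 100, 100, 95, 90],
    "Compliant Policy": [80, 15, 15, 5, 0],
    "Stiff Policy": [20, 0, 5, 35, 10],
}

# Equal weighting is only valid if every scenario has the same trial count
# (an assumption here).
overall = {method: sum(rates) / len(rates) for method, rates in results.items()}

for method, rate in overall.items():
    print(f"{method}: {rate:.0f}%")
```

The computed means match the reported "All" values for all four methods.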

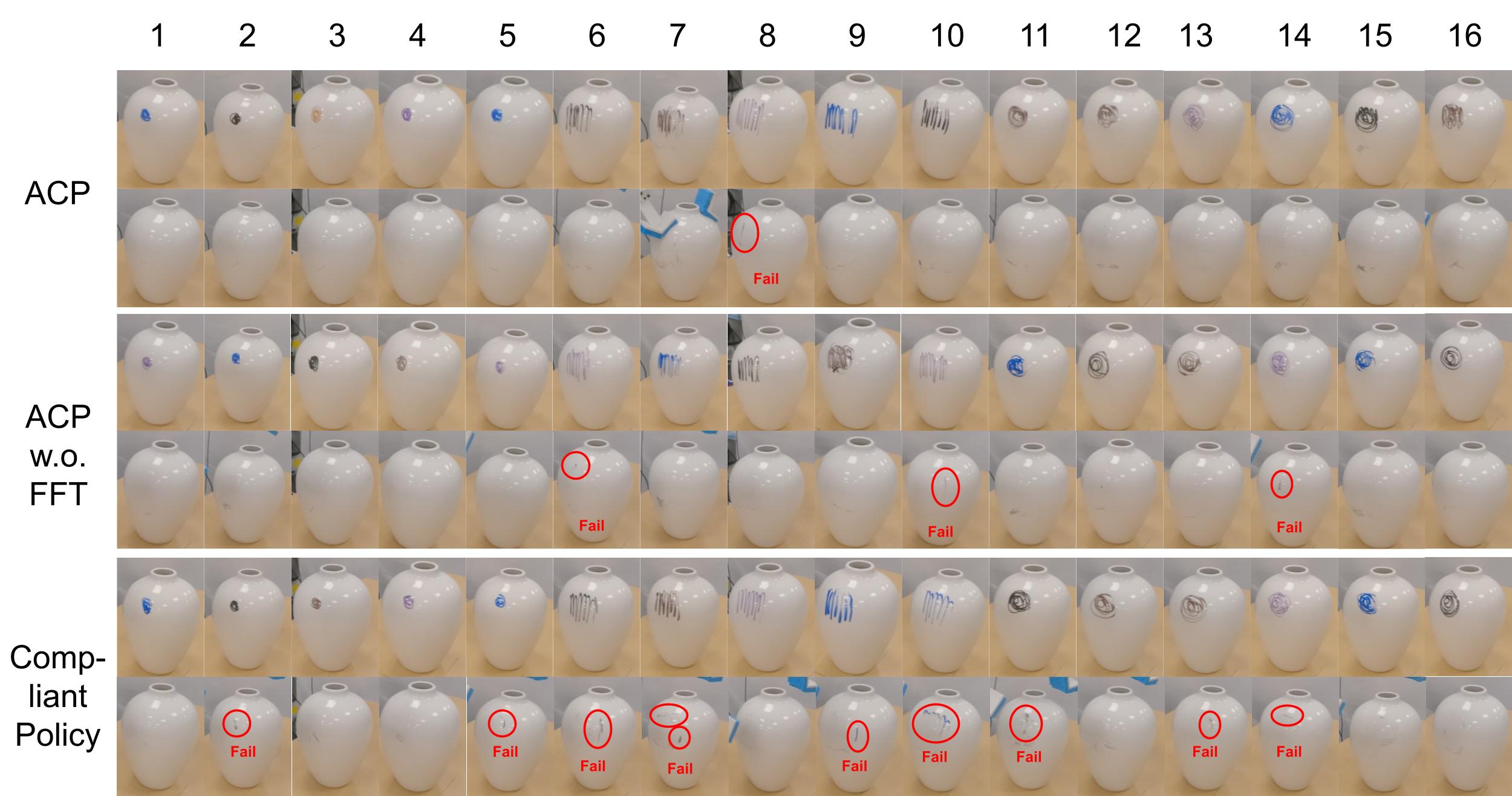

Task II: Vase Wiping

The task is to wipe off markings on a vase. The demonstrated motion uses the left arm to hold the vase while the right arm performs the wiping. We collected 200 demonstrations with various vase poses, marking shapes, and colors. Each demonstration includes one to five wipes to fully clean the markings. We ran 16 tests for each method:

Tests 1-5: small marking with five different vase locations.

Tests 6-10: large marking with five different vase locations.

Tests 11-16: medium marking with perturbations.

Success is defined as wiping the vase clean within three wipes. A vase is considered clean if what remains of the original marking fits within a 1 cm x 1 cm region, not counting any marks left by the wipe itself.

The video above does not include the [Stiff Policy], since it easily breaks the robot hand. In contrast, [ACP] safely engages and maintains contact during the wipes because of its compliance.

Compared with the [Compliant Policy], [ACP] tracks the desired motion accurately because it is stiff in the motion directions. The wiping motion of the [Compliant Policy] deviates from the position target because it is sensitive to the friction from the vase.

Compared with [ACP w.o. FFT], our policy with the FFT encoding has a higher success rate and finishes the task with fewer wipes. We observed that the FFT encoding, when used together with RGB encoding via cross-attention, makes better decisions about the next best wiping location.

The figure below shows the vase before and after each test.